Project Standalone

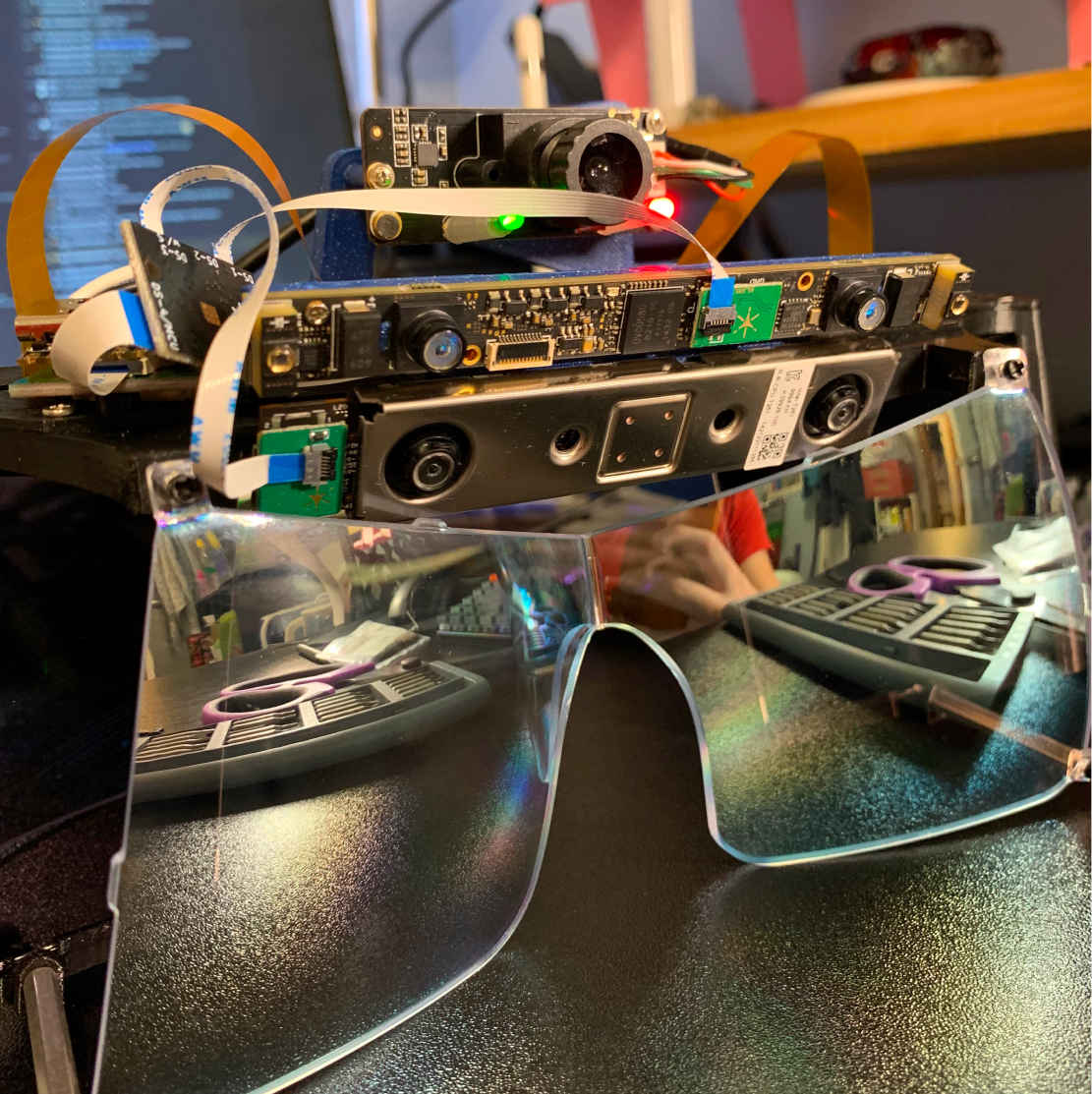

Open hardware and software for the first standalone Northstar-class headset.

Project Standalone is a brand-new, long-term effort at UW Reality Labs to build a standalone XR system with fully open hardware and software.

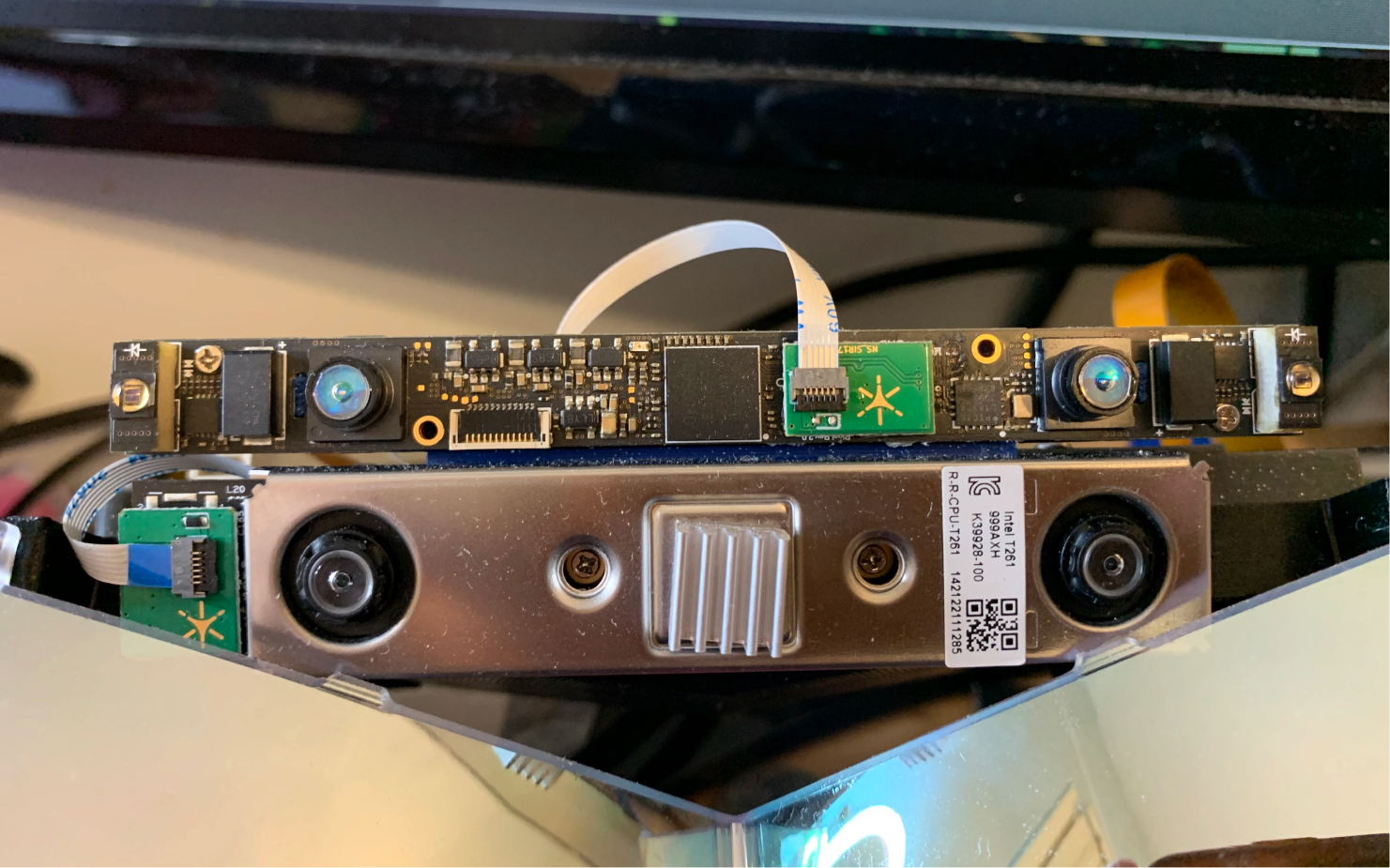

We’re taking inspiration from Project Northstar, a well-known open-source mixed-reality headset originally developed by Leap Motion. Northstar is respected for its wide field of view, high-resolution displays, and optical see-through AR design, and it remains one of the most capable open-source XR hardware platforms available. Currently, the Northstar headset requires an external device (e.g., a PC) to function. Though this was a common requirement in older headsets, newer models like the Meta Quest 3 have dropped it and are now standalone. That is, they need no hardware beyond the headset itself.

So, at UWRL, we’ve decided to take it a step further and ask: How can we make Project Northstar standalone? Though seemingly straightforward, this has become an involved project requiring effort in both hardware design and software development.

We’re not stopping at the hardware level; we’re building the software too. Our goal is to build the first open XR software stack that brings together the pieces people need to actually build and experiment with immersive systems.

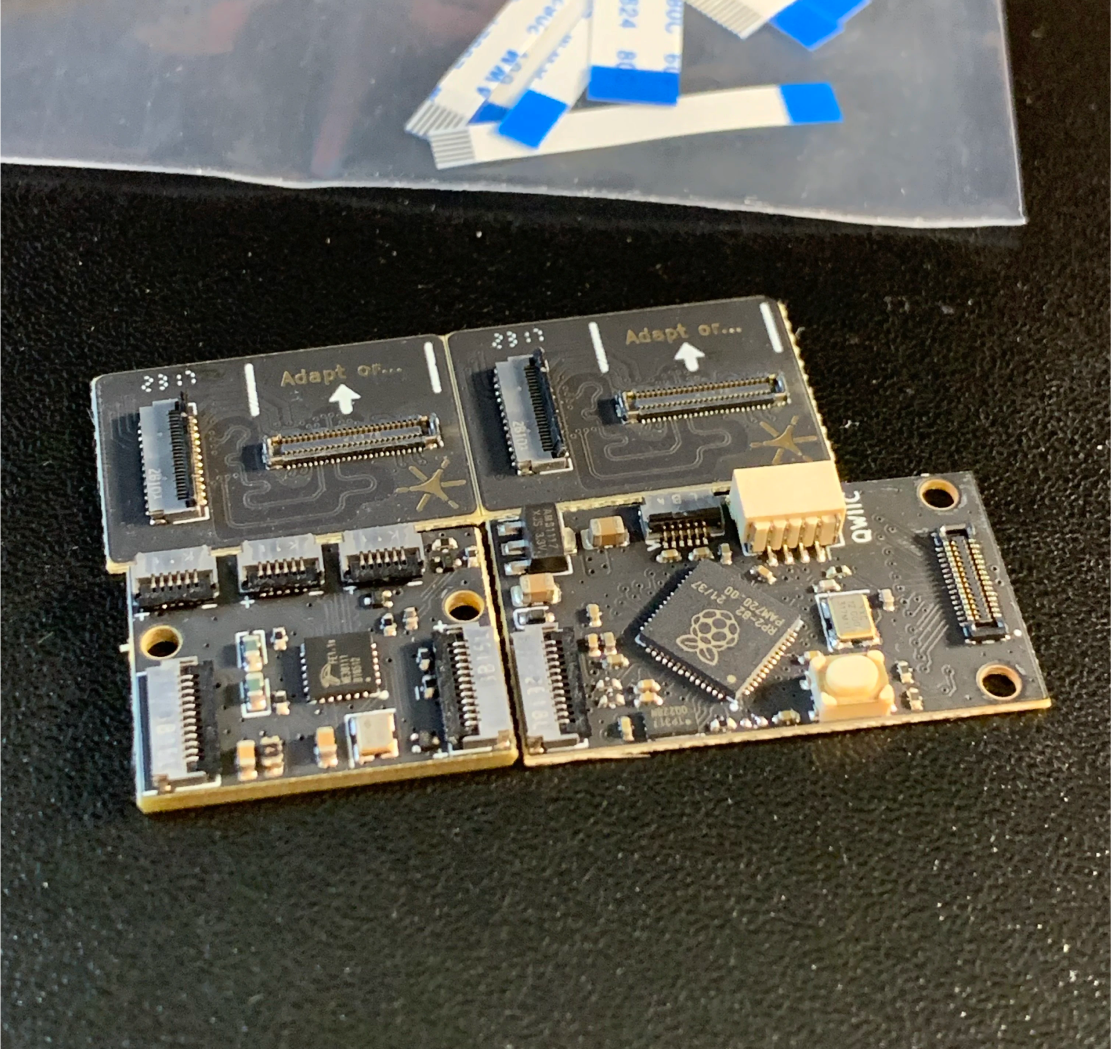

This work is ambitious by design. We are building a Linux-based system that runs entirely on the headset, powered by embedded compute, custom drivers, and an OpenXR runtime. On top of that, we’re developing a custom XR launcher and user interface in Godot, along with support for WebXR experiences, so the headset can boot directly into immersive environments without a PC.

If you join Project Standalone, you’ll be working on real systems, not demos or abstractions. Some members will focus on operating systems and systems-level work. Others will work closer to user-facing XR software, including building the system launcher and shell in Godot, designing XR windowing behaviour, and shaping how users interact with the headset (i.e., gesture UX; universal gestures is not the only team that touches interaction!).

The kind of work done here (XR runtimes, embedded graphics, hardware integration) is typically locked behind industry roles or proprietary platforms. The scope is broad by necessity, and contributors should expect to develop a rare understanding of how XR systems actually work in practice.

Why Standalone?

Standalone headsets remove the need for tethered PCs and open up new ways to prototype XR systems. We are building a self-contained platform that stays open, modifiable, and research-friendly.

What You Might Build

Expect to dive into the OpenXR runtime, custom drivers, and a Godot-based launcher shell. We are also exploring WebXR boot flows and next-generation interaction models beyond standard controllers.