Universal Text

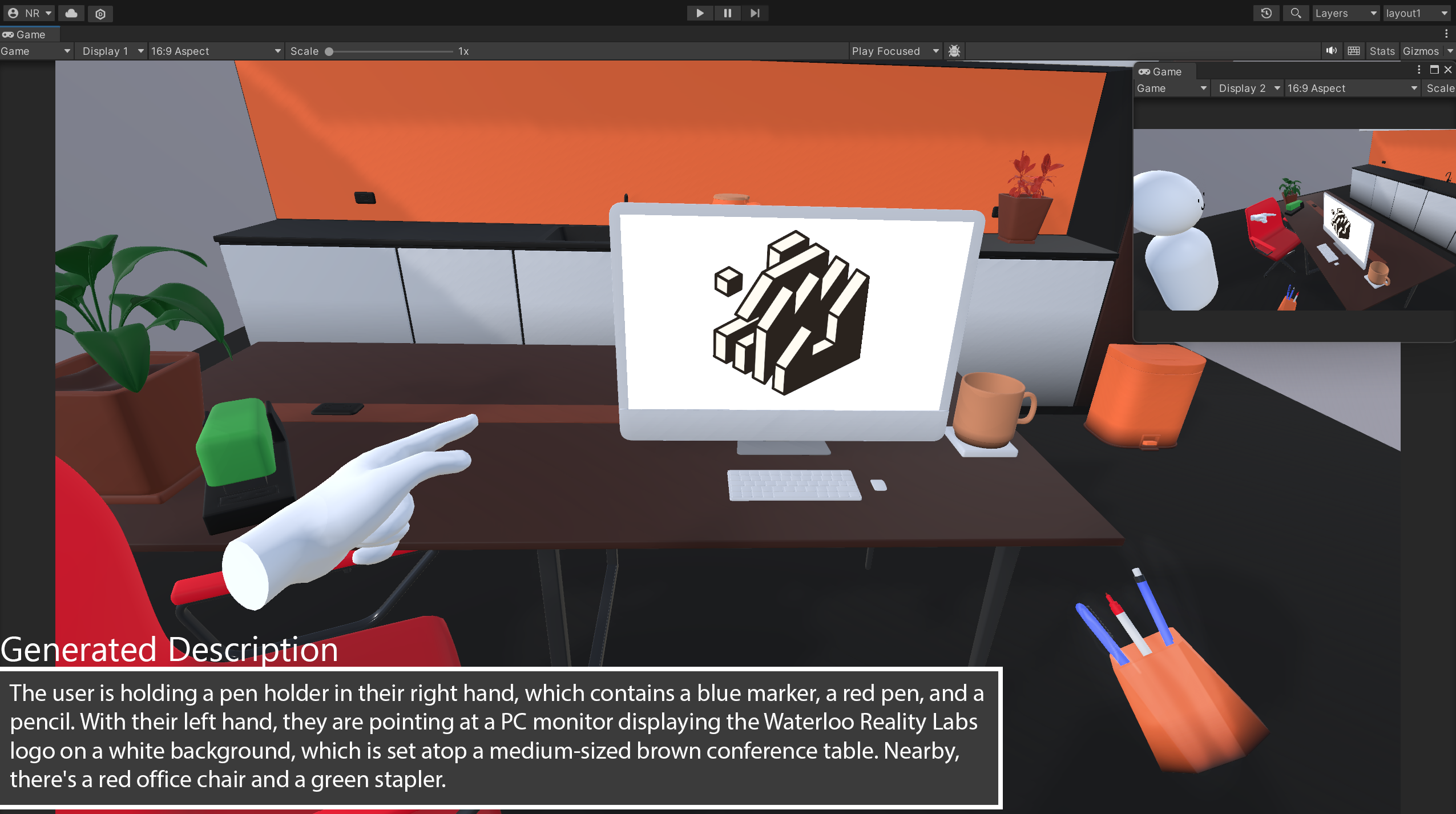

Building a dynamic textual representation of a user’s virtual environment and their interactions within it.

When you prompt a virtual assistant (for example Meta AI on Raybans glasses), what happens when you ask “What am I looking at”? Currently, the pipeline seems rather simplistic. The cameras on the glasses take a picture, that picture is passed through a model that can assign text labels to images, and finally that text label describing the whole image is passed into an LLM. This process, especially the step where a model must describe everything in an image using words, is often inaccurate.

What if we could build a system that…

…provides a richer text summary of a virtual environment, complete with descriptions of how objects compose each other, are placed within/next to/on top of each other?

…also describes how you, the user, is interacting with that environment at any moment? Could we assign additional text to describe that you are pointing at a specific object, or reaching out for one?

…runs in real time, that is, can constantly update every frame to provide an updated description. That way, we wouldn't have to wait for text generation, and we could create a live captioning system? …runs entirely on-device, meaning this information is never sent to the cloud?

If we created this, we could use it for…

…in-application virtual assistants that make use of a rich text summary for high-accuracy responses

…virtual science labs where users could receive detailed auto-generated scientific explanations about tools and objects they interact with

…dynamic VR scene descriptions for the visually impaired, describing layout and objects, or even what they're holding, pointing at or nearby to

…and so much more

Universal Text aims to explore this. We are creating a structured software package for Unity that allows for real time captioning of a VR user's interactions with their virtual environment. In other words, tools provided by our package aim to describe in natural language "what's happening" in a VR application at any moment in time, as if recounted by a third party observer. This textual description will be rich in detail and generated on-the-fly, providing seamless integration of tutorials, live captioning for accessibility, or virtual assistants into VR applications.

GitHub