Universal Gestures

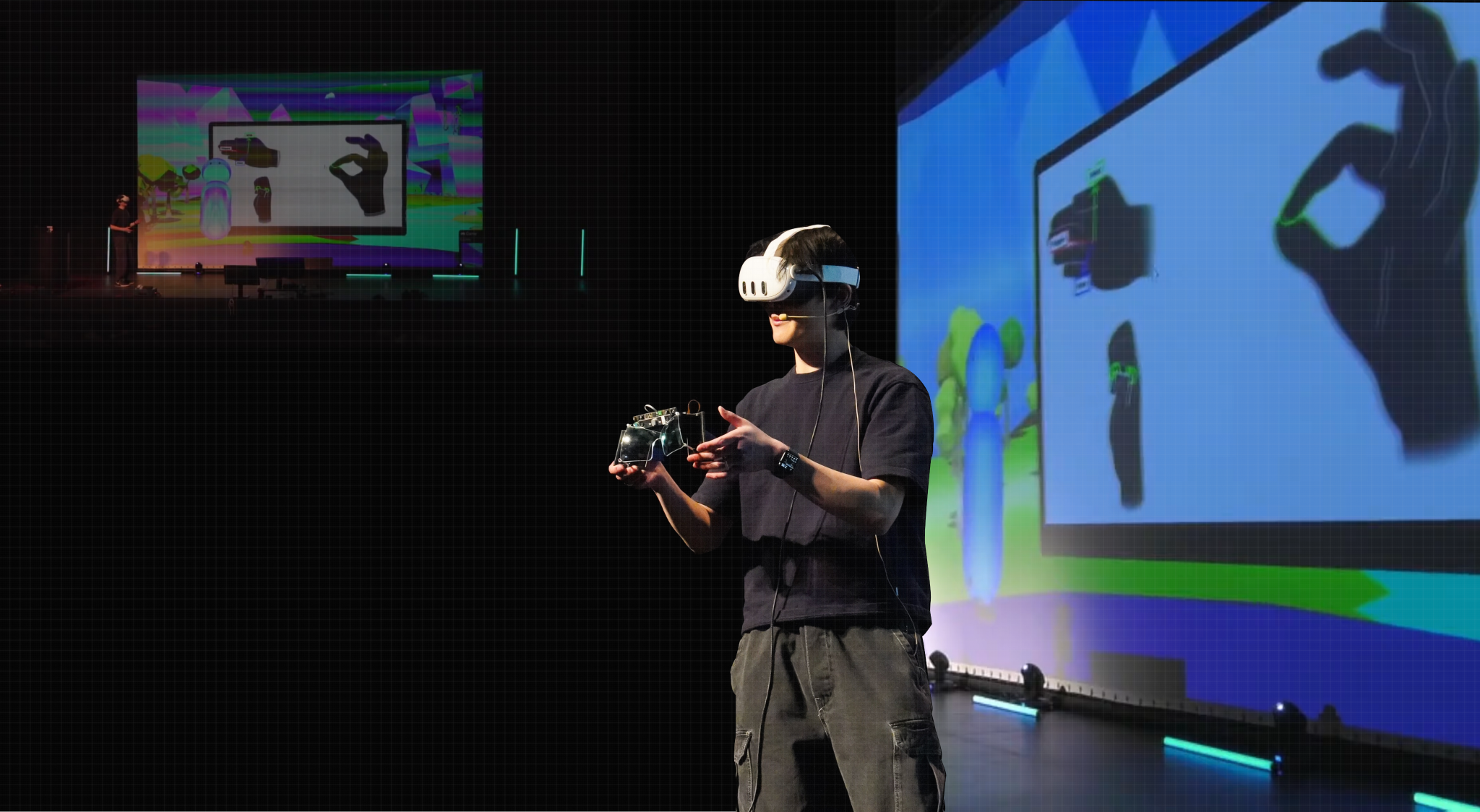

Software package for Meta Quest headsets geared towards developers of mixed-reality video games and apps that use hand tracking

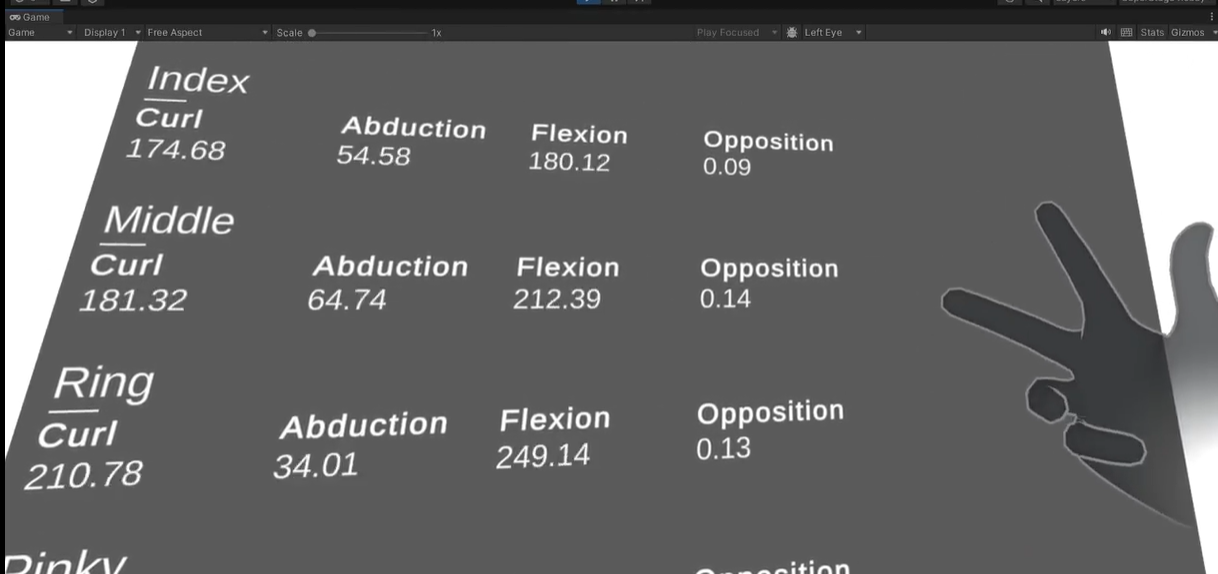

For developers who build with Unity, the most popular editor for creating immersive experiences, Meta provides the XR All-in-One SDK (UPM). This SDK lets developers interface with the sensors on Quest headsets and easily enable hand tracking in their apps. However, its support for detecting specific hand poses is constrained: the curl and flexion settings for each finger offer little room for customization. The existing package also does not take into account the position of the hands relative to one another.

The goal of Universal Gestures is to create an importable Unity package that expands and simplifies the hand gesture recognition system provided by Meta. Instead of using an on-or-off approach to finger poses, we will take the float values that the headset's sensors report for finger positions and apply a machine learning approach. By collecting a training set of popular hand gestures, we will train neural networks to classify them. The output of these trained networks will be accessible to C# scripts that we create, which can then be attached to GameObjects within Unity.
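To make the idea of classifying float finger values concrete, here is a minimal sketch in Python. It is not the project's actual model or data. It assumes a hypothetical feature layout of five curl values per hand (thumb to pinky, 0.0 = extended, 1.0 = fully curled), uses synthetic samples in place of a collected training set, and swaps in the simplest possible network, a single softmax layer, for whatever architecture the package ends up using.

```python
import math
import random

GESTURES = ["open_palm", "fist", "point"]

# Hypothetical prototype curl values (thumb, index, middle, ring, pinky).
# 0.0 = finger extended, 1.0 = finger fully curled. Not real sensor data.
PROTOTYPES = {
    "open_palm": [0.0, 0.0, 0.0, 0.0, 0.0],
    "fist":      [1.0, 1.0, 1.0, 1.0, 1.0],
    "point":     [1.0, 0.0, 1.0, 1.0, 1.0],
}


def make_dataset(samples_per_gesture, rng, noise=0.1):
    """Build a synthetic training set by adding noise to each prototype."""
    data = []
    for label, name in enumerate(GESTURES):
        for _ in range(samples_per_gesture):
            x = [min(1.0, max(0.0, v + rng.gauss(0.0, noise)))
                 for v in PROTOTYPES[name]]
            data.append((x, label))
    return data


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(weights, biases, x):
    """Forward pass of a single-layer network: one score per gesture."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


def train(data, n_features, n_classes, rng, epochs=200, lr=0.5):
    """Fit the layer with stochastic gradient descent on cross-entropy loss."""
    weights = [[0.0] * n_features for _ in range(n_classes)]
    biases = [0.0] * n_classes
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            probs = predict_proba(weights, biases, x)
            for c in range(n_classes):
                # Gradient of cross-entropy with respect to the logit of class c.
                grad = probs[c] - (1.0 if c == label else 0.0)
                for i in range(n_features):
                    weights[c][i] -= lr * grad * x[i]
                biases[c] -= lr * grad
    return weights, biases


def classify(weights, biases, x):
    """Return the most likely gesture name and its probability."""
    probs = predict_proba(weights, biases, x)
    best = max(range(len(probs)), key=probs.__getitem__)
    return GESTURES[best], probs[best]


rng = random.Random(0)
train_data = make_dataset(50, rng)
weights, biases = train(train_data, n_features=5, n_classes=len(GESTURES), rng=rng)

# A hand with every finger curled except the index finger.
label, confidence = classify(weights, biases, [0.9, 0.1, 0.95, 0.9, 1.0])
print(label, round(confidence, 2))
```

The point of the sketch is the contrast with an on-or-off approach: the classifier returns a probability per gesture, so a slightly relaxed finger lowers confidence instead of breaking detection outright.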

From the developer's perspective, all they have to do is import our package, attach the “UWRL Hand Pose Detection” script to an object in their Scene, choose which gesture they want to recognize, and specify a function to run when it is recognized. Just like that, the difficult part of recognizing a gesture is handled for them, and they can immediately get to work on their creative project.
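The "choose a gesture, specify a function" workflow can be sketched as a small callback pattern. The Python below is only an illustration of that pattern, not the package's API: in Unity this role would be played by the C# script attached to a GameObject, and the class name `GestureTrigger`, its `threshold` parameter, and the per-frame `update` call are all hypothetical. One design choice is assumed here as well: the callback fires once when the gesture begins, not on every frame it is held.

```python
from typing import Callable


class GestureTrigger:
    """Hypothetical stand-in for a gesture-detection component."""

    def __init__(self, target_gesture: str,
                 on_recognized: Callable[[], None],
                 threshold: float = 0.8):
        self.target_gesture = target_gesture  # gesture the developer chose
        self.on_recognized = on_recognized    # function to run on recognition
        self.threshold = threshold            # assumed minimum confidence
        self._active = False                  # was the gesture held last frame?

    def update(self, label: str, confidence: float) -> None:
        """Feed one frame of classifier output; fire on the rising edge only."""
        detected = (label == self.target_gesture
                    and confidence >= self.threshold)
        if detected and not self._active:
            self.on_recognized()
        self._active = detected


events = []
trigger = GestureTrigger("fist", lambda: events.append("fist recognized"))

# Simulated per-frame classifier output: (gesture label, confidence).
frames = [
    ("open_palm", 0.95),
    ("fist", 0.60),   # right gesture, but below the threshold
    ("fist", 0.92),   # gesture begins: callback fires
    ("fist", 0.97),   # still held: no second call
    ("open_palm", 0.90),
    ("fist", 0.91),   # gesture begins again: callback fires
]
for label, confidence in frames:
    trigger.update(label, confidence)

print(events)
```

Firing on the transition keeps a held gesture from invoking the developer's function dozens of times per second, which is usually what game logic wants for actions like "grab" or "select".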

Tech Blog